AI Ethics

Artificial Intelligence (AI) is no longer a futuristic concept. It is embedded in our lives, from the way we shop online to how we diagnose diseases. As AI systems grow more powerful, so do the ethical questions surrounding their design, deployment, and impact. These questions are not just academic; they are fundamental to how we shape a future that is fair, inclusive, and aligned with human values.

Why AI Ethics Matters

AI has the potential to bring enormous benefits. It can streamline operations, drive scientific breakthroughs, and improve quality of life. But without ethical guardrails, it can also amplify bias, undermine privacy, and make decisions with real-world consequences—often without accountability.

Navigating the Future: The Imperative of AI Ethics

Imagine an algorithm used in hiring that filters out qualified candidates based on gender or ethnicity, or a predictive policing system that disproportionately targets marginalized communities. These are not hypotheticals; they are real-world examples of how AI can go wrong when ethics aren’t a priority.

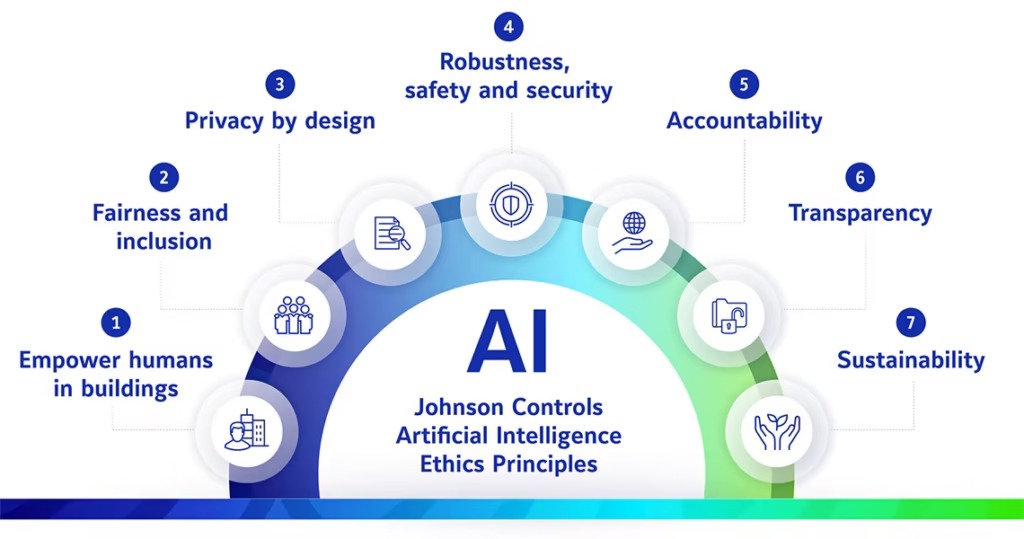

Key Principles of Ethical AI

To ensure AI serves humanity, several ethical principles have emerged as foundational:

1. Transparency

AI systems should be understandable and explainable. Users should know how decisions are made—especially when those decisions affect people’s lives.

2. Fairness

Algorithms must be designed to prevent bias and discrimination. This requires diverse data, inclusive teams, and ongoing audits of AI behavior.

3. Accountability

Developers and organizations must be responsible for the outcomes of the AI systems they build. There must be clear mechanisms for redress when harm occurs.

4. Privacy

AI must respect user privacy and data protection rights. This includes clear consent, minimal data collection, and robust security measures.

5. Safety

AI should be tested rigorously to ensure it behaves as expected—even in unpredictable environments. This includes protecting against misuse or malicious applications.
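
To make the "ongoing audits" mentioned under Fairness a little more concrete, here is a minimal sketch of one possible check: comparing how often each group receives a positive decision. The function names, the group labels "A" and "B", and the sample decisions are all hypothetical and chosen only for illustration. The ratio it computes is one common screening heuristic, not a complete fairness audit, and a low value is a prompt for closer review rather than proof of discrimination.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive decisions for each group.

    records: iterable of (group, selected) pairs, where selected is a bool.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Return the lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a positive decision, so rates are equal
    return min(rates.values()) / highest


# Hypothetical hiring decisions: (group label, was the candidate shortlisted?)
decisions = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"disparate impact ratio: {ratio:.2f}")
```

A team might run a check like this on a schedule and flag any model whose ratio drops below a threshold it has agreed on in advance. The threshold itself is a policy choice, not something the code can decide.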

The Role of Regulation

Governments and institutions are beginning to act. The European Union’s AI Act, for example, aims to classify and regulate AI applications based on risk. Similar efforts are underway globally. But regulation must strike a balance: protecting people while fostering innovation.

Industry self-regulation and ethical AI frameworks are a good start—but not enough on their own. Collaboration between tech companies, academia, civil society, and policymakers is essential to create standards that are both effective and adaptable.

The Human Factor

Ultimately, ethics in AI is not just about machines—it’s about people. It’s about the kind of world we want to build and the values we want our technology to reflect. Developers, designers, and decision-makers must recognize that every line of code carries ethical weight.

As we move into a future increasingly shaped by artificial intelligence, we must ask not only what AI can do, but what it should do.
